Hello, All

I am currently trying to use robot_localization node in my project.

I run two instances of the node.

First for *ODOM to BASE_FOOTPRINT* transform

with Odometry messages from /odom topic fused.

**ekf_odom_params.yaml**

frequency: 15

sensor_timeout: 0.1

two_d_mode: true

transform_time_offset: 0.0

transform_timeout: 0.0

print_diagnostics: true

debug: false

odom_frame: odom

base_link_frame: base_footprint

world_frame: odom

odom0: odom

odom0_config: [true, true, false,

false, false, false,

false, false, false,

false, false, false,

false, false, false]

odom0_queue_size: 10

odom0_nodelay: true

odom0_differential: false

odom0_relative: false

use_control: false

process_noise_covariance: [1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-3, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0.3, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0.3, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0.5, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0.5, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0.1, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3]

initial_estimate_covariance: [1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0]

Second instance of robot_localization for [MAP to ODOM] transformation

with Odometry msgs from /odom and Pose from Local Positioning System.

**ekf_map_params.yaml**

frequency: 15

sensor_timeout: 5

two_d_mode: true

transform_time_offset: 0.0

transform_timeout: 0.0

print_diagnostics: true

debug: true

map_frame: map

odom_frame: odom

base_link_frame: base_footprint

world_frame: map

odom0: odom

odom0_config: [true, true, false,

false, false, true,

false, false, false,

false, false, false,

false, false, false]

odom0_queue_size: 1

odom0_nodelay: true

odom0_differential: false

odom0_relative: false

pose0: lps

pose0_config: [true, true, false,

false, false, false,

false, false, false,

false, false, false,

false, false, false]

pose0_queue_size: 1

pose0_nodelay: true

pose0_differential: false

pose0_relative: false

use_control: false

process_noise_covariance: [1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-3, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0.3, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0.3, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0.5, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0.5, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0.1, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.3]

initial_estimate_covariance: [1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1.0]

Initial settings I took from project examples, just replaced GPS with my LPS

and removed IMU (no IMU sensor)

Everything is OK until robot starts moving.

After even the minor movement of the robot, Odom frame starts jumping and rolling over the map.

Maybe it's so called "oscillations", but I can't find any

explanation how to correct this.

I tried to follow recommendation to remove yaw from odom. It didn't help.

(and also unclear how robot_localization will calculate yaw, if it's the only source of yaw)

Can somebody help with robot_localization node setup?

I really appreciate any help.

↧

robot_localization node setup

↧

robot_localization loading two odom data in the meantime

Hi Tom/all:

I add another odom data in the ekf_template.yaml, it goes as follows:

odom0: Odom_1

odom0_config: [true, true, false,

false, false, false,

false, false, false,

false, false, true,

false, false, false]

odom0_queue_size: 2

odom0_nodelay: false

odom0_differential: false

odom0_relative: false

odom0_pose_rejection_threshold: 5

odom0_twist_rejection_threshold: 1

odom1: Odom_2

odom1_config: [true, true, false,

false, false, false,

true, true, true,

false, false, true,

false, false, false]

odom1_queue_size: 10

odom1_differential: true

odom1_relative: false

odom1_pose_rejection_threshold: 2

odom1_twist_rejection_threshold: 0.2

odom1_nodelay: false

imu0: imu_rt

imu0_config: [false, false, false,

false, false, false,

false, false, false,

true, true, true,

true, true, false]

imu0_nodelay: false

imu0_differential: false

imu0_relative: true

imu0_queue_size: 5

imu0_pose_rejection_threshold: 0.8

imu0_twist_rejection_threshold: 0.8

imu0_linear_acceleration_rejection_threshold: 0.8

I roslaunch ekf_template.launch in a terminal, and I got information like this , why would it happen?

I do appreciate it if someone can give me some clues.

Error: TF_NAN_INPUT: Ignoring transform for child_frame_id "base_link" from authority "unknown_publisher" because of a nan value in the transform (-nan -nan -nan) (-nan -nan -nan -nan)delta d = -nan

at line 240 in /tmp/binarydeb/ros-jade-tf2-0.5.15/src/buffer_core.cpp

Error: TF_DENORMALIZED_QUATERNION: Ignoring transform for child_frame_id "base_link" from authority "unknown_publisher" because of an invalid quaternion in the transform (-nan -nan -nan -nan)

at line 253 in /tmp/binarydeb/ros-jade-tf2-0.5.15/src/buffer_core.cpp

↧

↧

robot_localization and gmapping with "no map received"

Hi to all,

I'm using Husky A200 with RTK GPS (`/fix`), a SICK laser (`/scan`), a AHRS IMU (`/imu/data`) and wheel encoders.

I use `robot_localization` and `navsat_transform_node` to improve the position of my robot.

The topic `/odometry/filtered/global` contains the robot's odometry with the GPS information.

Since I would like to build a map and use GPS waypoints for the robot's navigation, I'm trying to use `slam_gmapping` with this command:

rosrun gmapping slam_gmapping scan:=scan _odom_frame:=odometry/filtered/global

The problem is that in RVIZ, I get the error: **"no map received**" and so I can only see the path followed by the robot, but no map.

Can you help me to fix this error, please?

I can see the `/scan` laser point in RVIZ without problems.

This is [my bag file](http://www.skeetty.com/bags/test4.bag) (40MB) and this is the launch file I'm using for the robot_localization package:

[false, false, false,

false, false, false,

true, true, true,

false, false, true,

false, false, false] [false, false, false,

true, true, true,

false, false, false,

true, true, true,

true, true, true] [false, false, false,

false, false, false,

true, true, true,

false, false, true,

false, false, false] [true, true, false,

false, false, false,

false, false, false,

false, false, false,

false, false, false] [false, false, false,

true, true, true,

false, false, false,

true, true, true,

true, true, true]

↧

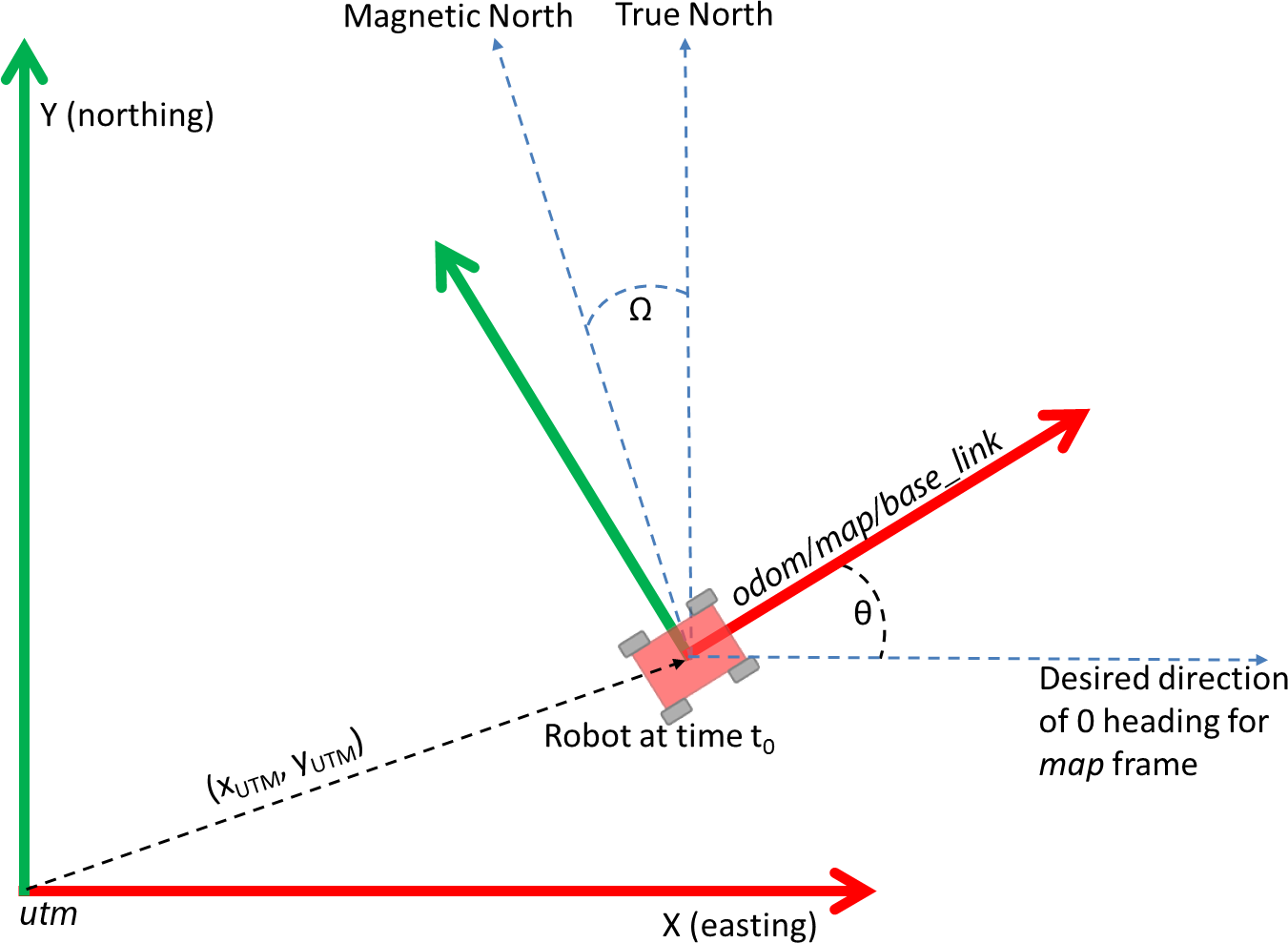

why exist map->odom trasform

HI Tom/all:

Since map frame and odom frame are just tags, they are equal to some degree. What transform map to odom when fusing GPS data for?

I do appreciate it if someone can englighten it to me.

↧

robot_localization incorrect map->utm transform

Hello ,

I have some questions regarding robot_localization package using real map from Google or OSM.

**Settings:**

I have inputs from GPS sensor only - as a start for testing - generating 3 messages from it:

a- gpx/fix message (using lattitude , longitude from GPS)

b- sensor/imu message ( only orientation extracted from GPS )

c- nav_msg/odometry message ( using vx,vy from GPS )

I understand that the robot localization will be very inaccurate. However at this stage I am interested in getting the proper robot_localization settings and able to link the real map from google to the ROS localization (map_frame) and see it on RVIZ (note: google map is used as static obstacle map and I may use it later for goal settings as well. Main purpose of google map is static obstacles)

**The issues / questions are:**

**1-** How to tell robot localization that the origin of the map is at specific UTM co-ordinates?

.................................................................................................................................................

**2-** I assumed that it can be done using datum parameter at navsat transform node as follows:

[30.070131 , 31.020022, 0.0]

I also set the following parameters for debugging.

When i run robot localization , These lines - from navsat transform.cpp - are printed:

[ INFO] [1497450565.937585163, 971.636000000]: Transform world frame pose is: Origin: (0 0 0) Rotation (RPY): (0, -0, 0)

[ INFO] [1497450565.937662238, 971.636000000]: World frame->utm transform is Origin: (-3215764.3632935076021 911887.59913052641787 0) Rotation (RPY): (0, 0, -1.7544863270000004007)

and These UTM coordinates are not close to the map origin UTM coordinates:

(latitude,longitude) = 30.070131 , 31.020022 should result ->(easting ,northing , zone) = 309151.07 , 3328209.18 , 36R

Accordingly on RVIZ the robot is not on the proper place on the map. So **What is the problem?**

...................................................................................................................................................................

**3-** In the datum param. I set the yaw to be 0 as the map is not tilted and the upper border is toward the north. I have no idea about the robot starting position nor orientation. So I set this value with respect to the map not to the robot.

[30.070131 , 31.020022, 0.0]

As mentioned earlier, navsat transform.cpp - is printed:

[ INFO] [1497450565.937662238, 971.636000000]: World frame->utm transform is Origin: (-3215764.3632935076021 911887.59913052641787 0) Rotation (RPY): (0, 0, -1.7544863270000004007)

**Where did -1.7544863270000004007 came from?**

...................................................................................................................................................................

**Robot:** Rbcar robot (at this stage it's irrelevant which robot is used)

**ROS distro:** Indigo in Ubuntu 14.04

**Launch files**: [navsat_transform](https://drive.google.com/open?id=0B1OvxZyDkPTpbHZzeHZYVWI1Z3M) [ekf_nodes](https://drive.google.com/open?id=0B1OvxZyDkPTpcUtrWkJKSWJlVlk) [move_base-map_server-rviz](https://drive.google.com/open?id=0B1OvxZyDkPTpQkhZaDFLaVpVcHc)

**map** : [yaml file](https://drive.google.com/open?id=0B1OvxZyDkPTpZHNTaWZXMlRyWTQ)

**Frame tree**: [frames](https://drive.google.com/open?id=0B1OvxZyDkPTpX09ZTWZaejg1RFU)

**rqt_graph_output:** [graph](https://drive.google.com/file/d/0B1OvxZyDkPTpVmcyaGJlZ1RYWGM/view?usp=drivesdk)

**Example bag file:**

This bag file was recorded while moving around a building one turn until we reached same start point with only GPS sensor (without the robot itself). The bag file was recorded in the same configuration .

[bag file](https://drive.google.com/open?id=0B1OvxZyDkPTpR0FQM0hRQzBhcm8)

[RVIZ screenshot before moving](https://drive.google.com/open?id=0B1OvxZyDkPTpUFdsZlFrek84Nkk) ,

[RVIZ screenshot at the end](https://drive.google.com/open?id=0B1OvxZyDkPTpTTg0OHZWREgyYjQ)

↧

↧

what is the coordinates in robot_localization

Hi Tom/all:

As suggested in robot_localization document and REP 103, the imu data is required to be adhere to frame like "x forward, y left, z up", and odom data should adhere to frame like "x east, y north, z up" (which is ENU coordinates).

Is that so? The relevant input data should go with the coordinates mentioned above or we need to broadcast transform from imuMsg->frame_id to baselink and odomMsg->frame_id to odom.

By the way, what coordinate should GPS data adhere to ?

I have no idea whether I'm right. I do appreciate it if someone can enlighten it to me.

↧

What's the mathmatics formula in robot_localization

Hi Tom/all:

I'm wondering what the mathmatics formula in ekf.cpp file is. It's the core of EKF. But I got confused when looking through those code. Can somebody enlighten it to me? Thank you so much!

↧

Global localization with only VO and GPS

I have a handheld stereo camera and GPS contraption. I use the EKF node in robot_localization with visual odometry to obtain the filtered /odom and the odom->baselink transformation. Now my question is, how can I use navsat_transform + EKF to get map->odom transformation in the absence of an IMU?

↧

Editing /odom message for robot_localization

Hello guys, I have following problem:

I set robot_localization to accept `/odom` messages from Stage simulator and visualize location in Rviz. As far as I adjust the param `` everything goes well (robot moves expectedly, stops when it hits an obstacle - even though the velocity remains constant and I can manipulate robot's position through Stage). `/odom` is the direct output of Stage.

`[true, true, false,

false, false, true,

true, true, false,

false, false, true,

false, false, false] [0.05, 0, ... [1e-9, 0, ...

↧

↧

What is good practice to fuse pose from a unique odom message in robot_localization in both ekf_se_odom and ekf_se_map ?

Hello,

I am fusing odometry from a lidar (I have it thanks to undocumented pub_odometry parameter of hector_slam), IMU and GPS data. I am using the dual_ekf_navsat_example.

My odom message has no twist information, only pose. So that it is taken into account by ekf_se_odom, I need to publish it with frame_id = odom, because world_map of ekf_se_odom is odom.

Then, if I want my odom message to be fused again in ekf_se_map, I need to publish it with frame_id = map, because world_map of ekf_se_map is map.

So... I guess I have to duplicate my odom message... am I right or am I missing something ?

Yvon

↧

how to use process_noise_covariance

I am using a ekf_localization_node from the robot_localization package to track the state of a remote controlled car. As is indicated in [this question](http://answers.ros.org/question/221837/robot_localization-ekf-internal-motion-model/) the filtering is based on an omnidirectional motion model so I would like to tune to process_noise_covariance parameter to give the ekf some information about how the system is expected to behave.

Currently I am setting the x-axis entry in process_noise_covariance to be larger than the y and z-axis entries (I'm assuming the coordinates refer to the base-link frame, but I haven't found confirmation of this). The idea is that this biases the ekf towards forward motion.

Is this the correct approach? Is there anything else I can keep in mind?

↧

When using navsat_transform_node, isn't it a problem to use IMU yaw to compute transform between map and base_link ?

Hello,

In [this video from Tom Moore](https://www.osrfoundation.org/tom-moore-working-with-the-robot-localization-package/) at time 12:36, I can see that he sets IMU yaw to true. The consequence of this is that as soon as the robot is started, there is an non zero angle between base_link and map, with X axis of map pointing to the east (except if robot is facing east of course).

I think there is an inconsistency with what this picture says . I understand here that map and base_link should be aligned at start so that navsat_transform_node can properly compute a transform between utm and map, thanks to the theta angle given by the IMU.

Having map and base_link aligned is mandatory because the IMU reports the angle between base_link and east.

Can you please point me my mistake, and if there is no mistake, how should map and base_link be related when using navsat_transform_node ?

Thank you

Yvon

↧

Error with orientation fusing IMU & GPS with robot_localization

Hi,

I have a GPS and IMU system for a land based robot that I want to fuse using robot_localization. I've configured navsat_transform as mentioned [here](http://answers.ros.org/question/200071/how-to-fuse-imu-gps-using-robot_localization/#200087).

I had a couple of questions:

1. Direction of motion does not match with the display (Rviz). How do I configure the system to get the correct orientation for odometry/gps and odometry/filtered?

2. What could be the cause for the difference in angle between gps and filtered output ?

3. In case of gps jumps, I see that filtered output jumps very far away (from 100,100 to 6000,7000) , odometry/gps still seems to be somewhat on track. Which output should I be using for motion control?

Rviz Output : http://imgur.com/a/0UMBf

Yellow - odometry/gps

Red - odometry/filtered

Data is captured while moving in a counter clockwise circle.

Bag file: https://drive.google.com/file/d/0BxVo6y1UQOweOWtZLXpfZVFxOVU/view?usp=sharing

IMU Setup:

I've tried to convert our IMU into the specs of REP-103 as follows:

1. Acceleration and angular velocity are reported in body frame - X is forward, Y is left and Z is upwards. Counter clockwise movement is positive angular velocity on yaw.

2. Orientation 0 towards magnetic north, so added 90 offset in navsat_transform and ekf_node.

3. I assume not correcting for magnetic declination will give a fixed offset in the readings.

Launch File:

[true, true, false,

false, false, false,

false, false, false,

false, false, false,

false, false, false] [false, false, false,

true, true, true,

false, false, false,

false, false, false,

true, true, true] [0.001, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0.001, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0.06, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0.06, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0.025, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0.025, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0.04, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.02, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.015] [1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9]

↧

↧

Integrate UM7 IMU and Adafruit GPS with robot_localization

I'm trying to estimate the position of a robot using the robot_localization ROS package. Currently I use a UM7 Orientation Sensor wich has their own ROS package (um7_driver) and a Adafruit Ultimate GPS Breakout version 3.

To read the GPS data I use the nmea_serial_driver. I read a lot of answers in this page and documents as REP-103 and REP-105, but I'm still not able to configure correctly the nodes and topics to obtain an output in /odometry/filtered or /odometry/gps topics.

I'm usign the navsat_transform_node to convert the GPS raw data (fix) to a odometry/gps message. This odometry/gps message is an input for the ekf_localization_node. In addition the odometry message (output) of the ekf_localization_node is an input for the navsat_transform_node in a circular path as were described in: [How to fuse IMU & GPS using robot_localization](http://answers.ros.org/question/200071/how-to-fuse-imu-gps-using-robot_localization/#200087)

I think that my IMU configuration is ENU compliant. My launchers files are described below with samples of gps/fix and imu/data messages, unfortunately I can't upload an imageof my rqt_graph. Any comments and suggestions will be appreciated!!!

IMU Message Sample:

---

header:

seq: 352

stamp:

secs: 1491248090

nsecs: 477437036

frame_id: imu

orientation:

x: 0.0580077504

y: 0.0024169896

z: 0.9721333587

w: -0.2270291759

orientation_covariance: [0.002741552146694444, 0.0, 0.0, 0.0, 0.002741552146694444, 0.0, 0.0, 0.0, 0.007615422629706791]

angular_velocity:

x: 8.9495899038e-05

y: -0.000212560517745

z: 0.000418925544915

angular_velocity_covariance: [1.0966208586777776e-06, 0.0, 0.0, 0.0, 1.0966208586777776e-06, 0.0, 0.0, 0.0, 1.0966208586777776e-06]

linear_acceleration:

x: -4.19586237482

y: 1.07622469897

z: 8.44690478507

linear_acceleration_covariance: [0.0015387262937311438, 0.0, 0.0, 0.0, 0.0015387262937311438, 0.0, 0.0, 0.0, 0.0015387262937311438]

---

GPS Message Sample:

---

header:

seq: 54

stamp:

secs: 1491248749

nsecs: 594259977

frame_id: /gps

status:

status: 0

service: 1

latitude: -33.0408566667

longitude: -71.6299133333

altitude: 132.4

position_covariance: [3.9204, 0.0, 0.0, 0.0, 3.9204, 0.0, 0.0, 0.0, 15.6816]

position_covariance_type: 1

---

EDIT1: Sorry but I forgot add my launch (configuration) files.

This is my IMU_GPS.lauch

[true, true, false,

false, false, false,

false, false, false,

false, false, false,

false, false, false] [true, true, false,

true, true, true,

false, false, false,

true, true, true,

true, true, true]

↧

Using teb_local_planner with robot_localization

Hello,

I am trying to get the teb planner (for ackermann vehicle) working with robot_localization ( wheel, IMU, & GPS).

Previously I was using teb planner with amcl for localization with good results. When I switched to using robot_localization (dual ekf navsat https://github.com/cra-ros-pkg/robot_localization/blob/kinetic-devel/params/dual_ekf_navsat_example.yaml) the teb planner began behaving erratically.

The odometry/filtered and odometry/filtered_map from the ekf nodes look good in rviz and don't jump around alot.

However the teb planner now is sending my robot in reverse for long distances until it crashes into a wall. Otherwise it sometimes just sends it in a circle.

My main question is, are there settings that need to be changed to use teb planner with the robot_localization package? what should I use for "odom_topic" in the teb planner? Which "global_frame" should I use for my local and global costmaps? If cmd_angle_instead_rotvel is set to true, will that conflict with twist messages coming back from the ekf_node odometry?

Any help would be greatly appreciated. For reference, my (relevant?) teb local planner parameters are as follows:

TebLocalPlannerROS:

odom_topic: odometry/filtered

teb_autosize: True

dt_ref: 0.3

dt_hysteresis: 0.1

global_plan_overwrite_orientation: True

max_global_plan_lookahead_dist: 6.0

feasibility_check_no_poses: 5

max_vel_x: 0.1

max_vel_x_backwards: 0.1

max_vel_theta: 0.5

acc_lim_x: 0.1

acc_lim_theta: 0.5

min_turning_radius: 4.0

wheelbase: 1.8542 # Wheelbase of our robot

cmd_angle_instead_rotvel: True

----------

Update: 1

@ufr3c_tjc thanks for the quick response. I have done as you say and changed my global_frame to odom for both the local and global costmap. It seems you have a very similar setup to mine (dual ekf with one continuous and one discrete gps).

However this change does not seem to fix my problem. The interesting thing I have found is that the teb_planner works fine with the robot_localization as long as I don't set the odom_topic to be /odometry/filtered (ie the output of my continuous ekf). If I leave odom_topic as the default odom (which doesnt exist) the teb_planner works fine. Therefore I believe that something in the teb_planner code is conflicting with the odom information being published by the ekf. Could it possibly be that the teb_planner doesn't expect the velocities to be in the base_footprint frame (ie only instantaneous x velocities?)

Any further ideas? Perhaps @croesmann has an idea?

↧

ros_localization - drift in z when fusing pitch from imu sensor

Hello,

I am currently using ros_localization to fuse imu and wheel odometry in the *odom* frame.

2. Imu0 is obtained from a pixhawk, using the package *mavros*, which outputs the topic */mavros/imu/data*, which has the message type: sensor_msgs/Imu, and provides roll,pitch,yaw, roll vel, pitch vel, yaw vel, x accel, y accel, z accel. I've checked this data in rviz and have confirmed that it is already in the ENU coordinate frame which ROS uses.

1. odom0 is wheel odometry coming from topic */quadOdom* and has the message type: nav_msgs/Odometry. This is a 2D position estimation in odom frame which provides x,y and yaw. The odometry is started at the same heading as the imu so that they are in-line with each other.

When I integrate x,y,yaw from odom0 and yaw from the imu, the EKF filter works fine and the filtered state estimate *odometry/filtered* looks reasonable (although it is only 2D). However when include pitch and roll from the imu, the z value of the *odometry/filtered* drifts by a huge amount, although the x,y and yaw values of the filtered path remain fine. How can i fix this so that when I include pitch and roll from the imu sensor the z value of *odometry/filtered* follows a more sensible path. My launch file is:

↧

robot_localization odom and map drifts

Currently, I’m trying to fuse simulated gazebo GPS and IMU data to get localization to use with navigation stack

I've tested the move_base with perfect odometry given by Gazebo skid steering plugin and there's no problem with that

But when I'm using the fused data from robot_localization my odom and map frame drifts constantly when the robot moves, making it impossible for the planner to make decisions.

Here's a short video with the demonstration:

https://youtu.be/EZv2GhAg0SU

Here's the launch file used for localization:

↧

↧

robot_localization not generating odom to robot's sensor_frame transform

Hello all,

I'm trying to fuse an IMU and a DVL (measures linear velocity on x, y, z). I have the following axis configurations.

- base_link : robot's frame

- base_imu :

imu's frame

- base_dvl : DVL's frame

I have a transform broadcaster which broadcasts base_imu->base_link and base_dvl->base_link. The picture attached shows the frame poses. I have set the fixed frame as base_link here (rviz).

This is the TF tree:

But when I run robot_localization, the localization is perfect but it doesn't show my sensor frames. There are also some warnings generated in rviz (check left pane), saying there isn't any transform to odom frame. But there is a transform as per the tf_tree. Here is a picture:

How do I fix this issue?

↧

Launching robot_localization with Python api

I am using python to launch ROS nodes. I can launch the ekf_localization_node, but cannot seem to pass odom data to the node. In all the examples I have found, the parameters such as odom0, sensor_timeout etc. are set in the launch file within the node namespace. How do you do that in Python ? I am currently using the following script:

def load_params_from_yaml(yaml_file_path):

from rosparam import upload_params

from yaml import load

f = open(yaml_file_path, 'r')

yamlfile = load(f)

f.close()

upload_params('/ekf_localization_node', yamlfile)

But, my node cannot find the /odom data. I set the namespace to "/ekf_localization_node" - tried setting it " and '/' and neither of them worked. I can echo the rostopic /odom and see the odom data. I have the following YAML file:

frequency: 30

sensor_timeout: 2.0

two_d_mode: true

transform_time_offset: 0.0

transform_timeout: 0.0

print_diagnostics: true

debug: false

map_frame: map # Defaults to "map" if unspecified

odom_frame: odom # Defaults to "odom" if unspecified

base_link_frame: base_link # Defaults to "base_link" if unspecified

world_frame: odom # Defaults to the value of odom_frame if unspecified

odom0: /odom

odom0_config: [true, true, false,

false, false, false,

false, false, false,

false, false, true,

false, false, false]

odom0_queue_size: 2

odom0_nodelay: false

odom0_differential: false

odom0_relative: false

↧

Robot localization: GPS causing discrete jumps in map frame

I am using the robot localization node to fuse IMU, encoder, and GPS data. When I view my robot in RVIZ in the map frame it jumps all around to wherever the GPS is saying that it is. In other words it seems that it is not utilizing the encoder data. Anyone had this problem before? Seems like I'm missing something simple. Thanks in advance.

Here is a bunch of info on my system:

**Launch file:**

Note that I am not using the GPS transform node because I am using an RTK GPS system that can provide my location relative to a base station.

[false, false, false,

true, true, true,

false, false, false,

true, true, false,

true, true, true] [false, false, false,

false, false, false,

true, true, false,

false, false, false,

false, false, false] [0.05, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0.05, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0.06, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0.03, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0.06, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0.025,0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0.025,0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0.04, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.005,0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.01, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0.005] [1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1e-9 ,0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 1e-9 ,0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9 ,0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1e-9] [false, false, false,

true, true, true,

true, true, false,

true, true, false,

true, true, true] [true, true, true,

false, false, false,

false, false, false,

false, false, false,

false, false, false]

↧